Voice recognition of emotion has proven quite effective in call center applications, where a customer who sounds very angry can get immediately transferred to a senior supervisor.

On emotion detection, facial analysis has historically proven to be much less accurate than simple voice analysis. Detection of these attributes will no longer be available to new customers beginning June 21, 2022, and existing customers have until June 30, 2023, to discontinue use of these attributes before they are retired. To mitigate these risks, we have opted to not support a general-purpose system in the Face API that purports to infer emotional states, gender, age, smile, facial hair, hair, and makeup. API access to capabilities that predict sensitive attributes also opens up a wide range of ways they can be misused-including subjecting people to stereotyping, discrimination, or unfair denial of services. In the case of emotion classification specifically, these efforts raised important questions about privacy, the lack of consensus on a definition of emotions and the inability to generalize the linkage between facial expression and emotional state across use cases, regions, and demographics. We collaborated with internal and external researchers to understand the limitations and potential benefits of this technology and navigate the tradeoffs. More from Bird: “ In another change, we will retire facial analysis capabilities that purport to infer emotional states and identity attributes such as gender, age, smile, facial hair, hair, and makeup.

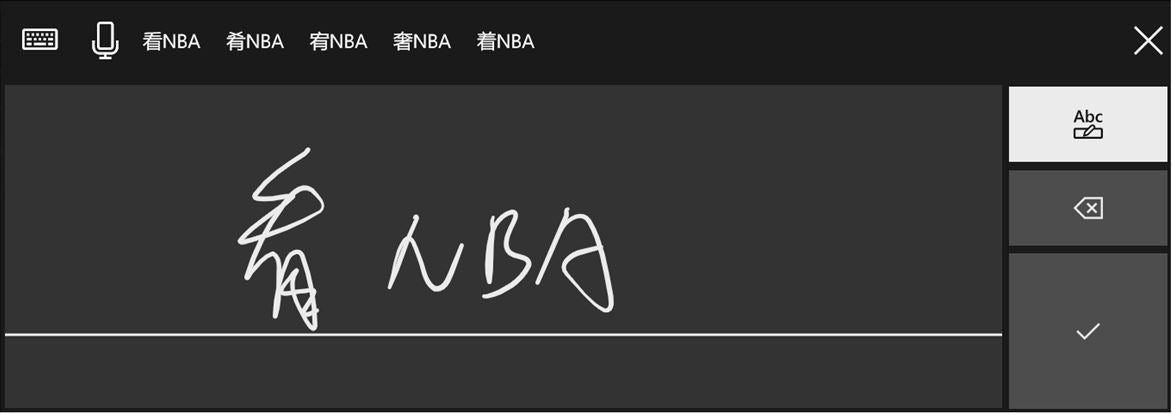

Over time, they slowly fall into what I’ll call "Star Trek" mode and speak as they would to another person.Ī similar problem was discovered with emotion-detection efforts. At first, they speak slowly and tend to over-enunciate. I've seen this play out with how people interact with voice recognition. One way to deal with is to hold much longer recording sessions in as relaxed an environment as possible, After a few hours, some people may forget that they are being recorded and settle into casual speaking patterns. By its very nature, people being recorded for voice analysis are going to be a bit nervous and they are likely to speak strictly and stiffly. One way to address this is to reexamine the data collection process. Really? The developers didn’t know that before? I bet they did, but failed to think through the implications of not doing anything. “We stepped back, considered the study’s findings, and learned that our pre-release testing had not accounted satisfactorily for the rich diversity of speech across people with different backgrounds and from different regions.”Īnother issue Microsoft identified is that people of all backgrounds tend to speak differently in formal versus informal settings. One of the situations Microsoft discussed involves speech recognition, where it found that “ speech-to-text technology across the tech sector produced error rates for members of some Black and African American communities that were nearly double those for white users,” said Natasha Crampton, Microsoft’s Chief Responsible AI Officer. This certainly sounds nice, but is that truly what this change does? Or will Microsoft simply lean on it as a way to stop people from using the app where the inaccuracies are the biggest? 
Look at that second sentence, where Bird highlights this additional hoop for users to jump through “to ensure use of these services aligns with Microsoft’s Responsible AI Standard and contributes to high-value end-user and societal benefit.” "Facial detection capabilities–including detecting blur, exposure, glasses, head pose, landmarks, noise, occlusion, and facial bounding box - will remain generally available and do not require an application.”

This includes introducing use case and customer eligibility requirements to gain access to these services. By introducing Limited Access, we add an additional layer of scrutiny to the use and deployment of facial recognition to ensure use of these services aligns with Microsoft’s Responsible AI Standard and contributes to high-value end-user and societal benefit.

Existing customers have one year to apply and receive approval for continued access to the facial recognition services based on their provided use cases. “ Effective today (June 21), new customers need to apply for access to use facial recognition operations in Azure Face API, Computer Vision, and Video Indexer.
